We are delighted to announce Isovalent Enterprise for Cilium 1.14, introducing Cilium Multi-Network!

Isovalent Enterprise for Cilium is the hardened, enterprise-grade, and 24×7-supported version of the eBPF-based cloud networking platform Cilium. In addition to all features available in the open-source version of Cilium, the enterprise edition includes advanced networking, security, and observability features popular with enterprises and telco providers.

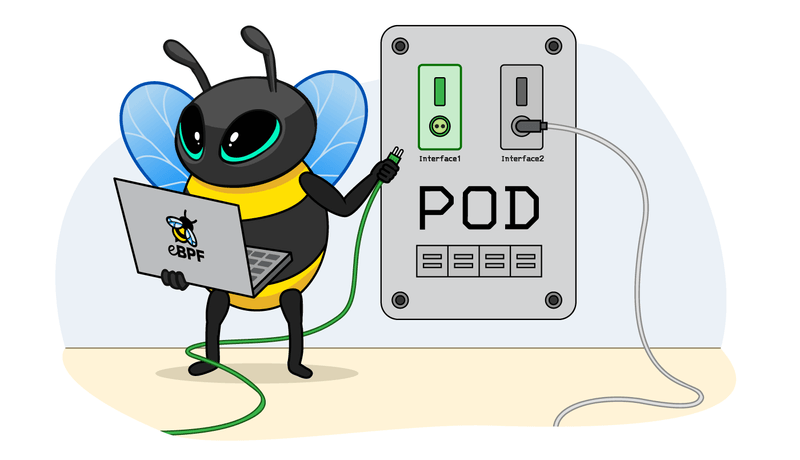

The highlight of this new enterprise release is undoubtedly native support for Multi-Network: the ability to connect a Kubernetes Pod to multiple network interfaces.

This is tremendously useful for a variety of use cases:

- Network Segmentation: Connecting Pods with multiple network interfaces can be used to segment network traffic. For example, you can have one interface for internal connectivity over a private network and another for external connectivity to the Internet.

- Multi-Tenancy: In a multi-tenant Kubernetes cluster, you can use Multi-Network alongside Cilium Network Policies to isolate network traffic between tenants by assigning different interfaces to different tenants or namespaces.

- Service Chaining: Service chaining is a network function virtualization (NFV) use case where multiple networking functions or services are applied to traffic as it flows to and from a Pod. Multi-Network can help set up the necessary network interfaces for these services.

- IoT (Internet of Things) and Edge Computing: For IoT and edge computing scenarios, Multi-Network can be used alongside Cilium Network Policies to impose network isolation on multi-tenant edge devices.

Importantly, Multi-Network with Isovalent Enterprise for Cilium is fully compatible with Cilium Network Policies and Hubble – meaning you don’t have to compromise on security and observability while using this feature!

Multi-Network Lab

In this lab, learn about the new Isovalent Enterprise for Cilium 1.14 feature - Multi-Network!

Start Multi-Network Lab

But there’s more! Isovalent Enterprise for Cilium also includes all the features introduced in Cilium 1.14.

Let’s review some of the Cilium 1.14 highlights before diving into Multi-Network!

What is new in Cilium & Isovalent Enterprise for Cilium 1.14?

- Mutual Authentication: improve your security posture with zero effort (more details)

- Envoy DaemonSet: a new option to deploy Envoy as a DaemonSet instead of embedded inside the Cilium agent (more details)

- WireGuard Improvements: encryption with Cilium is getting better – you can now encrypt the traffic from node-to-node and also use Layer 7 policies alongside WireGuard (more details)

- Gateway API Update: our leading Gateway API implementation is updated with support for the latest Gateway API version, additional route type support and multiple labs (more details)

- L2 Announcements: Cilium can now natively advertise External IPs to local networks over Layer 2, reducing the need to install and manage tools such as MetalLB (more details)

- BGP Enhancements: introducing support for better operational tools and faster failover (more details)

- Multi-Pool IPAM: introducing support to allocate IPs to Pods from multiple IPAM pools. Multi-Pool IPAM is a requirement for the Multi-Network feature described below (more details)

- BIG TCP for IPv4: after the introduction of BIG TCP support for IPv6 in Cilium 1.13, here comes IPv4 support. Ready for a 50% throughput improvement? (more details)

What is Multi-Network (Beta) in Isovalent Enterprise for Cilium 1.14?

Kubernetes is built on the premise that a Pod should belong to a single network. While this approach may work for most use cases, enterprises and telcos often require a more sophisticated and flexible networking model for their advanced deployments.

There are many use cases where a Pod may require attachments to multiple networks with different properties via different interfaces. This is what Multi-Network in Isovalent Enterprise for Cilium 1.14 delivers.

Network Segmentation

In a typical Kubernetes environment, Pods will be attached to a single flat network, often with direct outbound Internet access. Certain organisations that operate within regulatory requirements often require more granularity. For example, there are cases where platform operators want certain Pods to have a public-facing interface to access the Internet and an internal private one. This becomes possible with Cilium Multi-Network.

It enables operators to build networking zones within their cluster to provide different levels of access and another method to enforce micro-segmentation.

Multi-Tenancy Isolation

Kubernetes environments are commonly shared by multiple organizations. Each tenant shares cluster resources and is segregated using namespaces, resource quotas and limits, RBAC, and network policies.

Sometimes, though, compliance requirements demand that segmentation happen not only in software but also in hardware.

Using Multi-Network alongside Cilium Network Policies, you can isolate network traffic between tenants by assigning different interfaces to tenants or namespaces.

Service Chaining

Most telcos are adopting a Container Network Function (CNF) model, where network functions no longer run on hardware appliances but as virtual functions. With Cilium Multi-Network, operators can direct traffic between Pods and specific networks for service chaining.

Cilium Multi-Network Walkthrough

A Multi-Network model, where Pods have multiple network interfaces, implies the following requirements:

- Multiple pools of IPs the network interfaces can get their IPs from

- Multiple networks to which the network interfaces can be attached

- Being able to apply different security policies depending on which network interface is being used

- Being able to observe the traffic using Hubble and identify which network interface the traffic is coming from

Let’s walk through a complete example of how Cilium Multi-Network addresses each requirement:

Multi-Pool IPAM and Multi-Network

Cilium 1.14 introduced the Multi-Pool IP Address Management (IPAM) mode, where multiple pools of IP ranges can be created.

With Multi-Pool IPAM, you can deploy a CiliumPodIPPool specifying the network range that will be allocated. The example below uses the 192.168.16.0/20 prefix.

The pool can then be attached directly to a network by using the IsovalentPodNetwork CRD. This CRD describes a virtual network a Pod can attach to.

When using the Multi-Network feature, you can use the network.v1alpha1.isovalent.com/pod-networks=secondary_network annotation to attach your Pod to secondary_network and get an IP address from the pool attached to the network (you will see more details about it shortly).

Let’s try:

The Pod has received an IP from the correct pool and network and as you can see below, the 192.168.16.32/27 network was carved out for the kind-worker2 node.

One of the benefits of Multi-Pool is that it can dynamically allocate more CIDR blocks as demand for IPs grows.

Let’s verify this with a Deployment of 60 Pods attached to this network:

Once deployed, you will see that an additional IP block – 192.168.16.64/27 – has been added to support the demand:

Note the needed field above: as the number of required IPs has significantly increased, another /27 CIDR was allocated to the Cilium Node.
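The block arithmetic behind this can be sketched with Python's standard `ipaddress` module. This is only an illustration, not Cilium's allocator: the /20 pool and the /27 block size come from the example, while the assumption that every address in a block is usable by Pods is a simplification.

```python
import ipaddress
import math

# Pool prefix from the example; the /27 block size is inferred from the
# 192.168.16.32/27 and 192.168.16.64/27 blocks mentioned in the text.
POOL = ipaddress.ip_network("192.168.16.0/20")
BLOCK_PREFIX = 27

# Every /27 block that can be carved out of the pool.
blocks = list(POOL.subnets(new_prefix=BLOCK_PREFIX))
addresses_per_block = blocks[0].num_addresses


def blocks_needed(pod_count: int) -> int:
    """Blocks required for pod_count Pods, assuming (as a simplification)
    that every address in a block can be handed to a Pod."""
    return math.ceil(pod_count / addresses_per_block)


print(len(blocks))           # 128
print(addresses_per_block)   # 32
print(blocks_needed(60))     # 2

# Both blocks from the walkthrough are valid /27 carve-outs of the pool.
for cidr in ("192.168.16.32/27", "192.168.16.64/27"):
    print(cidr, ipaddress.ip_network(cidr) in blocks)
```

Under these assumptions a single /27 cannot hold 60 Pods, which is consistent with a second block being allocated as demand grows.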

The other field in the IsovalentPodNetwork manifest to notice is the following:

This adds a route to steer traffic towards the secondary network. Here is an illustration of the routing table once Multi-Network is configured.
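As a rough mental model, the effect of such a route can be sketched as a longest-prefix-match lookup. The routing table below is hypothetical: the interface name `cil1` and the 192.168.16.0/20 prefix appear in this walkthrough, but `eth0`, the default route, and the helper name `pick_interface` are assumptions made for illustration.

```python
import ipaddress

# Hypothetical routing table for a Pod attached to two networks.
# cil1 and 192.168.16.0/20 come from the walkthrough; eth0 and the
# default route are assumptions.
ROUTES = [
    (ipaddress.ip_network("0.0.0.0/0"), "eth0"),
    (ipaddress.ip_network("192.168.16.0/20"), "cil1"),
]


def pick_interface(destination: str) -> str:
    """Return the interface of the most specific route matching destination."""
    addr = ipaddress.ip_address(destination)
    matches = [(net, dev) for net, dev in ROUTES if addr in net]
    _, dev = max(matches, key=lambda match: match[0].prefixlen)
    return dev


print(pick_interface("192.168.16.7"))  # cil1
print(pick_interface("10.0.0.5"))      # eth0
```

Traffic to the secondary network leaves through the secondary interface because its route is more specific than the default route.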

With the network and pool configured, our Pod is ready to be attached to multiple networks.

Multi-Network Interfaces

To connect a Pod to multiple networks, we need to annotate it accordingly.

As you saw above, when we want to attach a Pod to additional networks, we use the network.v1alpha1.isovalent.com/pod-networks annotation.

This annotation consists of a comma-delimited list of IsovalentPodNetwork names. For example, the annotation network.v1alpha1.isovalent.com/pod-networks: default,jupiter causes Cilium to attach the client Pod to the default and jupiter networks.
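To make the annotation format concrete, here is a minimal parsing sketch. It is not Cilium's code: the function name `pod_networks` is made up, and the fallback to the `default` network when the annotation is absent is an assumption rather than documented behavior.

```python
ANNOTATION_KEY = "network.v1alpha1.isovalent.com/pod-networks"


def pod_networks(annotations: dict, fallback: str = "default") -> list:
    """Split the comma-delimited annotation value into network names.

    Falling back to a single default network when the annotation is
    missing is an assumption made for this sketch.
    """
    raw = annotations.get(ANNOTATION_KEY, "")
    names = [name.strip() for name in raw.split(",") if name.strip()]
    return names or [fallback]


print(pod_networks({ANNOTATION_KEY: "default,jupiter"}))  # ['default', 'jupiter']
print(pod_networks({ANNOTATION_KEY: "jupiter"}))          # ['jupiter']
print(pod_networks({}))                                   # ['default']
```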

Let’s deploy three Pods, with three different annotations:

To summarize:

- server-default will be connected to the default network.

- server-jupiter will be attached only to the jupiter network.

- The client Pod will be attached to both.

Let’s verify that connectivity is working. First, let’s check our Pods:

Note that, from this output, you can only see the primary IP address attached to client.

Next, let’s have a look at the Cilium Endpoints. All application containers which share a common IP address are considered a single Cilium Endpoint. All endpoints are assigned a security identity.

Let’s now verify that a CiliumEndpoint resource is present for each server Pod, and two CiliumEndpoint resources are present for the client Pod:

The client endpoint represents the primary Pod interface and the client-cil1 endpoint represents the secondary Pod interface of the client Pod. Both server Pods only have a single endpoint because they are each only attached to a single network.

Let’s verify that a secondary network interface cil1 was created in the client Pod, connecting it to the jupiter network:

Note the cil1 IP (192.168.16.7 in the example above) matches the client-cil1 interface IP address in the Cilium endpoint list above.

Next, let’s validate that the server is reachable by sending an HTTP GET request to the server’s /client-ip endpoint. In its reply, it will report the client’s IP address:

Finally, let’s validate connectivity in the jupiter network (192.168.16.0/20) from the multi-networked client pod to the server-jupiter pod:

Connectivity was successful. The replies from the two server Pods show that each request arrived from a different source IP. The source IP wasn’t masqueraded: it remained that of the originating Pod interface, so each request left through the Pod’s correct network interface. Both HTTP tests succeeded.

But can we apply different network policies to these different network interfaces?

Let’s find out.

Multi-Network with Network Policies

Security enforcement architectures have traditionally been based on IP address filters. Given the churn and scale of microservices, that approach is largely irrelevant in the cloud-native world. To avoid the complications it brings, which can limit scalability and flexibility, Cilium entirely separates security from network addressing. Instead, security is based on the identity of a Pod, which is derived from its labels.

Let’s have a look at the identity of the client endpoint. Its ID is 32716:

Let’s now have a look at the identity of the client-cil1 endpoint – in other words, the secondary interface on the same client Pod. Its ID is 1640:

The two IDs are different, and therefore different network policies can be applied to each network interface.

Every Cilium Endpoint associated with a secondary pod interface has one additional label of the form cni:com.isovalent.v1alpha1.network.attachment=<network name> added by the Cilium CNI plugin.

This is how each network interface will have its own Cilium security identity, allowing us to write policies that only apply to a specific network by selecting this extra label.
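The idea that one extra label yields a separate identity can be sketched as follows. This is a toy model, not Cilium's identity allocator: the class `IdentityAllocator`, the `k8s:app` label, and the numeric IDs are all hypothetical. Only the attachment label key comes from the text.

```python
from itertools import count

# Label key added to endpoints of secondary interfaces, as described above.
ATTACHMENT_LABEL = "cni:com.isovalent.v1alpha1.network.attachment"


class IdentityAllocator:
    """Toy allocator: one numeric identity per distinct set of labels."""

    def __init__(self, first_id: int = 1000):
        self._next_id = count(first_id)
        self._by_labels = {}

    def identity_for(self, labels: dict) -> int:
        key = frozenset(labels.items())
        if key not in self._by_labels:
            self._by_labels[key] = next(self._next_id)
        return self._by_labels[key]


allocator = IdentityAllocator()

# Hypothetical labels for the primary and secondary endpoints of one Pod.
primary = {"k8s:app": "client"}
secondary = {**primary, ATTACHMENT_LABEL: "jupiter"}

print(allocator.identity_for(primary) != allocator.identity_for(secondary))  # True
print(allocator.identity_for(primary) == allocator.identity_for(dict(primary)))  # True
```

Because the secondary endpoint carries a different label set, a policy that selects on the attachment label matches only traffic on that network.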

Let’s review a couple of Cilium Network Policies:

The server-default-policy network policy allows traffic from the client Pod to server-default, but only HTTP GET requests to the "/client-ip" path.

The server-jupiter-policy network policy allows traffic from endpoints in the jupiter network to server-jupiter, but only HTTP GET requests to the "/public" path.

Therefore, a curl test from client to the servers would produce the following results:

| From client | /client-ip | /public |
| --- | --- | --- |
| To server-default | Forwarded | Denied |
| To server-jupiter | Denied | Forwarded |
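The expected results can be reproduced with a small allow-list model of the two policies. This is a sketch of the matching logic only, not of how Cilium evaluates policies: the `HttpRule` type, the `app=client` selector label, and the default-deny fallback are assumptions made for illustration.

```python
from dataclasses import dataclass

ATTACHMENT_LABEL = "cni:com.isovalent.v1alpha1.network.attachment"


@dataclass(frozen=True)
class HttpRule:
    target: str                  # destination Pod the policy applies to
    source_selector: frozenset   # (key, value) labels the source must carry
    method: str
    path: str


# Simplified stand-ins for server-default-policy and server-jupiter-policy.
RULES = [
    HttpRule("server-default", frozenset({("app", "client")}), "GET", "/client-ip"),
    HttpRule("server-jupiter", frozenset({(ATTACHMENT_LABEL, "jupiter")}), "GET", "/public"),
]


def verdict(target: str, source_labels: dict, method: str, path: str) -> str:
    """Forward only if some rule matches; everything else is denied."""
    labels = set(source_labels.items())
    for rule in RULES:
        if (rule.target == target and rule.source_selector <= labels
                and rule.method == method and rule.path == path):
            return "Forwarded"
    return "Denied"


# Source labels of the client's primary and secondary (jupiter) endpoints.
client = {"app": "client"}
client_cil1 = {"app": "client", ATTACHMENT_LABEL: "jupiter"}

for path in ("/client-ip", "/public"):
    print("server-default", path, verdict("server-default", client, "GET", path))
    print("server-jupiter", path, verdict("server-jupiter", client_cil1, "GET", path))
```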

Let’s verify:

What this simple example highlights is that you can apply different network policies to your network interfaces!

Multi-Network with Isovalent Enterprise for Cilium 1.14 can be secured with granular network policies. But how can you observe the resulting traffic and quickly identify whether traffic was dropped or forwarded by a specific policy? By using Hubble, of course!

Multi-Network Observability with Hubble

If you are not familiar with the Hubble observability tool, check out the Hubble Series and download the Hubble Cheatsheet.

Using the hubble CLI, you can see all the requests from the client Pod – across both network interfaces it’s connected to:

You can filter by verdict with the --verdict flag, for example by executing:

If you want to see traffic coming from a particular interface, you can use the identity assigned to the endpoint of the interface. In the example above, 28437 is the ID of the client and 1613 is the ID of the secondary interface (client-cil1).

Let’s extract the identity of the client and client-cil1 endpoints and assign them to environment variables:

Filter to the interface of your choice with:

You can also filter based on HTTP attributes, like the HTTP status code. For example, you can find all successful responses with this command:
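The same three filters (verdict, source identity, HTTP status code) can also be sketched as post-processing of flow records in Python. The records below are hand-written and heavily simplified. The field names are assumed to follow Hubble's JSON flow output, and identity 9999 is a made-up server identity, so treat this as an illustration rather than a reference for the output format.

```python
import json

# Hand-written sample flows. Identities 28437 (client) and 1613 (client-cil1)
# come from the text; 9999 and all other values are illustrative.
SAMPLE_FLOWS = """
{"flow": {"verdict": "FORWARDED", "source": {"identity": 28437}, "l7": {"type": "REQUEST", "http": {"method": "GET", "url": "/client-ip"}}}}
{"flow": {"verdict": "DROPPED", "source": {"identity": 28437}, "l7": {"type": "REQUEST", "http": {"method": "GET", "url": "/public"}}}}
{"flow": {"verdict": "FORWARDED", "source": {"identity": 1613}, "l7": {"type": "REQUEST", "http": {"method": "GET", "url": "/public"}}}}
{"flow": {"verdict": "FORWARDED", "source": {"identity": 9999}, "l7": {"type": "RESPONSE", "http": {"code": 200}}}}
"""

flows = [json.loads(line)["flow"] for line in SAMPLE_FLOWS.splitlines() if line.strip()]


def with_verdict(records, verdict):
    """Keep flows with the given verdict, e.g. DROPPED."""
    return [f for f in records if f.get("verdict") == verdict]


def from_identity(records, identity):
    """Keep flows whose source endpoint has the given security identity."""
    return [f for f in records if f.get("source", {}).get("identity") == identity]


def with_http_status(records, code):
    """Keep HTTP response flows with the given status code."""
    return [f for f in records if f.get("l7", {}).get("http", {}).get("code") == code]


print(len(with_verdict(flows, "DROPPED")))   # 1
print(len(from_identity(flows, 1613)))       # 1
print(len(with_http_status(flows, 200)))     # 1
```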

As you see, Multi-Network with Isovalent Enterprise for Cilium 1.14 works seamlessly with other Cilium features such as security and observability!

Multi-Network Status and Roadmap

While we are excited about the Multi-Network feature in Isovalent Enterprise for Cilium 1.14 – and I hope you are too! – it is a beta release and we plan to support more use cases in the future. We look forward to hearing from users on use cases it can unlock for you!

In future releases, we will be including IPv6 support for Multi-Network, integration with Segment Routing version 6 (SRv6 support was added in the previous Isovalent Enterprise for Cilium release), and much more!

We will also keep a close eye on the Multi-Network Kubernetes Enhancement Proposal (KEP) as it matures.

Core Isovalent Enterprise for Cilium features

Advanced Networking Capabilities

Isovalent Enterprise for Cilium 1.13 introduced a set of advanced routing and connectivity features popular with large enterprises and telcos, including SRv6 L3 VPN support, Overlapping PodCIDR support for Cluster Mesh, Phantom Services for Cluster Mesh, and much more.

Platform Observability

Isovalent Enterprise for Cilium includes Role-Based Access Control (RBAC) for platform teams to let users access dashboards relevant to their namespaces, applications, and environments.

This enables application teams to have self-service access to their Hubble UI Enterprise interface and troubleshoot application connectivity issues without involving SREs in the process.

Zero Trust Visibility Lab

Creating the right Network Policies can be difficult. In this lab, you will use Hubble metrics to build a Network Policy Verdict dashboard in Grafana showing which flows need to be allowed in your policy approach.

Start Zero Trust Visibility Lab

Forensics and Auditing

From the Hubble UI Enterprise, operators have the ability to create network policies based on actual cluster traffic.

Isovalent Enterprise for Cilium also includes Hubble Timescape – a time machine for observability data with powerful analytics capabilities.

While Hubble only includes real-time info, Hubble Timescape is an observability and analytics platform capable of storing & querying observability data that Cilium and Hubble collect.

Isovalent Enterprise for Cilium also includes the ability to export logs to SIEM (Security Information and Event Management) platforms such as Splunk or an ELK (Elasticsearch, Logstash, and Kibana) stack.

To explore some of these enterprise features, check out some of the labs:

Network Policies Lab

Create Network Policies based on actual cluster traffic!

Start Network Policies Lab

Connectivity Visibility with Hubble Lab

Visualize label-aware, DNS-aware, and API-aware network connectivity within a Kubernetes environment!

Start Connectivity Visibility with Hubble Lab

Advanced Security Capabilities via Tetragon

Tetragon provides advanced security capabilities such as protocol enforcement, IP and port whitelisting, and automatic application-aware policy generation to protect against the most sophisticated threats.

To explore some of the Tetragon enterprise features, check out some of the labs:

Security Visibility Lab

In this lab, you will simulate the exploitation of a Node.js application, with the attacker spawning a reverse shell inside a container and moving laterally within the Kubernetes environment. You will learn how Tetragon Enterprise can trace the attacker’s lateral movement and data exfiltration after the exploit.

Start Security Visibility Lab

TLS Visibility Lab

In this lab, learn how you can use Isovalent Enterprise for Cilium to inspect TLS traffic and identify the version of TLS being used. You will also learn how to export events in JSON format to a SIEM.

Start TLS Visibility Lab

Enterprise-grade Resilience

Isovalent Enterprise for Cilium includes capabilities for organizations that require the highest level of availability. This includes features such as High Availability for DNS-aware network policy (video) and High Availability for the Cilium Egress Gateway (video).

Enterprise-grade Support

Last but certainly not least, Isovalent Enterprise for Cilium includes enterprise-grade support from Isovalent’s experienced team of experts, ensuring that any issues are resolved promptly and efficiently. Customers also benefit from the help and training from professional services to deploy and manage Cilium in production environments.

Learn More!

If you’d like to learn more about Isovalent Enterprise for Cilium 1.14 and related topics, check out the following links:

- Join the 1.14 release webinar – with Thomas Graf, Co-Creator of Cilium, CTO, and Co-Founder of Isovalent, to learn more about the latest and greatest open source and enterprise features of Isovalent Enterprise for Cilium and Cilium 1.14.

- Request a Demo – Schedule a demo session with an Isovalent Solution Architect.

- Read more about the Cilium 1.14 release – Effortless Mutual Authentication, Service Mesh, Networking Beyond Kubernetes, High-Scale Multi-Cluster, and Much More.

- Learn more about Isovalent & Cilium at our resource library – It lists guides, tutorials, and interactive labs.

Feature Status

Here is a brief definition of the feature maturity levels used in this blog post:

- Stable: A feature that is appropriate for production use in a variety of supported configurations due to significant hardening from testing and use.

- Limited: A feature that is appropriate for production use only in specific scenarios and in close consultation with the Isovalent team.

- Beta: A feature that is not appropriate for production use, but where user testing and feedback is requested. Customers should contact Isovalent support before considering Beta features.

Prior to joining Isovalent, Nico worked in many different roles – operations and support, design and architecture, technical pre-sales – at companies such as HashiCorp, VMware, and Cisco.

In his current role, Nico focuses primarily on creating content to make networking a more approachable field and regularly speaks at events like KubeCon, VMworld, and Cisco Live.