The Cilium project is a hive of activity, and at Isovalent we’re proud to be at the heart of it. Cilium 1.11 was released a couple of days ago, and it’s an exciting release with many new features. There’s also a new beta program for trying out Cilium Service Mesh capabilities. In this post, we’ll dive into many of these new features, and also look at the Isovalent Cilium Enterprise 1.11 release.

Service Mesh Beta Program

Before we talk about the 1.11 release, let’s mention the beta program that was announced by the Cilium community allowing users to try out the new capabilities coming in Cilium Service Mesh.

- eBPF-based Service Mesh (Beta): New service mesh capabilities including L7 traffic management & load-balancing, TLS termination, canary rollouts, tracing, and more.

- Integrated Kubernetes Ingress (Beta): Support for Kubernetes Ingress implemented with a combination of eBPF and Envoy.

The Cilium site has an article with more details about the beta program, and how you can participate. These features are part of the open source Cilium project but they’re being developed in a separate feature branch, to allow for testing, feedback and changes before they land in the main Cilium codebase for release 1.12 early in 2022.

Cilium 1.11

The latest release of Cilium 1.11 includes extra features for Kubernetes and standalone load-balancer deployments.

- OpenTelemetry Support: Ability to export Hubble’s L3-L7 observability data in OpenTelemetry tracing and metrics format. (More details)

- Kubernetes APIServer Policy Matching: New policy entity for hassle-free policy modeling of communication from/to the Kubernetes API server. (More details)

- Topology Aware Routing: Enhanced load-balancing with support for topology-aware hints to route traffic to the closest endpoint, or to keep traffic within a region. (More details)

- BGP Pod CIDR Announcement: Advertise PodCIDR IP routes to your network using BGP (More details)

- Graceful Service Backend Termination: Support for graceful connection termination in order to drain network traffic when load-balancing to pods that are being terminated. (More details)

- Host Firewall Promotion: Host firewall functionality has been promoted to stable and is ready for production use (More details)

- Improved Load Balancer Scalability: Cilium load balancing now supports more than 64K backend endpoints. (More details)

- Improved Load Balancer Device Support: The accelerated XDP fast-path for load-balancing can now be used with bonded devices (More details) and more generally also in multi-device setups. (More details)

- Istio Support with Kube-Proxy-Replacement: Cilium’s kube-proxy replacement mode is now compatible with Istio sidecar deployments. (More details)

- Egress Gateway Improvements: Enhancements to the egress gateway functionality, including support for additional datapath modes. (More details)

- Managed IPv4/IPv6 Neighbor Discovery: Extensions to both the Linux kernel as well as Cilium’s load-balancer in order to remove its internal ARP library and delegate the next hop discovery for IPv4 and now also IPv6 nodes to the kernel. (More details)

- Route-based Device Detection: Improved user experience for multi-device setups with Cilium through route-based auto-detection of external-facing network devices. (More details)

- Kubernetes Cgroup Enhancements: Enhancements to Cilium’s kube-proxy replacement integration for runtimes operating in pure cgroup v2 mode as well as Linux kernel improvements for Kubernetes mixed mode cgroup v1/v2 environments. (More details)

- Cilium Endpoint Slices: Cilium is now more efficient in CRD mode with its control-plane interactions with Kubernetes, enabling 1000+ node scalability in a way that previously required a dedicated Etcd instance to manage. (More details)

- Mirantis Kubernetes Engine Integration: Support for Mirantis Kubernetes Engine. (More details)

Isovalent Cilium Enterprise 1.11

Alongside our investment in the open source Cilium project, we have been adding new capabilities to Isovalent’s enterprise-ready distribution.

- Timescape (Beta, Enterprise): New ClickHouse-based analytics platform to persistently store Hubble observability data over time. (More details)

- HA for FQDN & Egress IP (Enterprise): Initial support to allow an egress NAT policy to select a group of gateway nodes instead of a single one. (More details)

- eBPF-based high-performance L7 Tracing & Metrics (Enterprise): New enhanced proxy-free L7 visibility mode for HTTP, gRPC, DNS, and TLS, allowing export of tracing, flow logs, and metrics at minimal overhead. (More Details)

Features marked with (Enterprise) are available in Isovalent Cilium Enterprise. Features marked with (Beta) are available for testing and should not be used in production without prior testing.

What is Cilium?

Cilium is open source software for transparently providing and securing the network and API connectivity between application services deployed using Linux container management platforms such as Kubernetes.

At the foundation of Cilium is a new Linux kernel technology called eBPF, which enables the dynamic insertion of powerful security, visibility, and networking control logic within Linux itself. eBPF is utilized to provide functionality such as multi-cluster routing, load balancing to replace kube-proxy, transparent encryption as well as network and service security. Besides providing traditional network-level security, the flexibility of eBPF enables security with the context of application protocols and DNS requests/responses. Cilium is tightly integrated with Envoy and provides an extension framework based on Go. Because eBPF runs inside the Linux kernel, all Cilium functionality can be applied without any changes to the application code or container configuration.

See the section [Introduction to Cilium] for a more detailed general introduction to Cilium.

OpenTelemetry Support

As part of this release, support for OpenTelemetry has been added.

OpenTelemetry is a CNCF project that defines a telemetry protocol and data formats covering distributed tracing, metrics and logs. The project provides an SDK as well as a collector component that can run on Kubernetes. Typically, an application exposes OpenTelemetry data through direct instrumentation, most often implemented in-app using the OpenTelemetry SDK. An OpenTelemetry collector is used to gather data from various applications in a cluster and send it to one or more backends. Jaeger (another CNCF project) is one of the backends that can be used for storage and presentation of trace data.

The Hubble adapter for OpenTelemetry is an add-on component that can be deployed into a cluster running Cilium (1.11 is the best, of course, but it should work with older releases too). The adapter presents itself as a custom OpenTelemetry collector that embeds a Hubble receiver. We recommend using the OpenTelemetry operator for deployment (see user guide for details). The adapter reads flow data from Hubble and can convert it to trace data as well as log data.

Adding this adapter to an OpenTelemetry-enabled cluster provides valuable insight into network events alongside application-level telemetry. Correlation of HTTP flows and spans produced by the OpenTelemetry SDK is already possible with this release.

Topology Aware Load-Balancing

It is common to deploy Kubernetes clusters spanning multiple data centers or availability zones. This brings not only benefits such as high-availability, but also some operational complications along with it.

Until recently, Kubernetes did not have a built-in construct to describe whether Kubernetes service endpoints are located at the “same topology level”. This meant that as part of a service load-balancing decision, Kubernetes nodes could select endpoints residing in a different availability zone than the client requesting the service. The side effects could be, for example, increased cloud bills (usually because cloud providers surcharge traffic that crosses availability zones) or increased latency for these requests. More broadly, the locality is defined entirely by the user and can be any topology-related subject: for example, the service traffic should be load-balanced among endpoints in the same node, same rack, same failure zone, same failure region, same cloud provider, and so on.

Kubernetes v1.21 introduced a feature called Topology Aware Routing to address this limitation. It sets hints for endpoints within an EndpointSlice object of a service which has the service.kubernetes.io/topology-aware-hints annotation set to auto. The hints contain a zone name in which an endpoint runs, and they are taken from the node’s topology.kubernetes.io/zone label. Two nodes are considered in the same topology level if they have the same value for the zone label.

The hints are consumed by Cilium’s kube-proxy replacement, which filters the endpoints it routes to based on the hints set by the EndpointSlice controller. As a result, the load-balancer prefers endpoints from the same zone.
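The hint-based filtering described above can be sketched roughly as follows. This is a simplified model rather than Cilium’s implementation: the `Endpoint` class, the `select_backends` function, and both fallback rules (ignore hints when any endpoint lacks them, and use all endpoints when none match the zone) are assumptions made for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Endpoint:
    address: str
    # Zones listed in the EndpointSlice hints for this endpoint (may be empty).
    for_zones: list = field(default_factory=list)


def select_backends(endpoints, node_zone):
    """Return the endpoints a node in node_zone should load-balance to."""
    # Assumption: if the zone is unknown or any endpoint lacks hints,
    # hints are ignored and all endpoints stay eligible.
    if not node_zone or any(not ep.for_zones for ep in endpoints):
        return list(endpoints)
    local = [ep for ep in endpoints if node_zone in ep.for_zones]
    # Assumption: fall back to every endpoint when none is hinted for the zone.
    return local or list(endpoints)


endpoints = [
    Endpoint("10.0.1.10", ["zone-a"]),
    Endpoint("10.0.2.10", ["zone-b"]),
    Endpoint("10.0.2.11", ["zone-b"]),
]
print([ep.address for ep in select_backends(endpoints, "zone-b")])
```

A node in `zone-b` ends up with only the two `zone-b` endpoints, while a node in a zone without any hinted endpoint keeps the full list.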

The Kubernetes feature is currently in alpha, and thus requires the feature gate to be enabled. Please refer to the official documentation for more information.

Kubernetes APIServer Policy Matching

In managed Kubernetes environments like GKE, EKS, and AKS, the IP address of the kube-apiserver is opaque. In previous versions of Cilium, there was no canonical way to write Cilium Network Policies to allow traffic to the kube-apiserver. It involved knowing a few implementation details around Cilium security identity allocation and where the kube-apiserver was deployed (within or outside the cluster).

New in 1.11, Cilium now understands these nuances and provides users a way to allow traffic with the dedicated apiserver policy primitive. Under the hood, this primitive is the entities selector that understands the meaning of the reserved kube-apiserver label, which is automatically applied to any IP addresses associated with the kube-apiserver.

In unmanaged Kubernetes clusters, the kube-apiserver entity can be used in both ingress and egress directions from a pod’s perspective.

In managed clusters (such as GKE, AKS and EKS), the kube-apiserver entity can be used in the egress direction from a pod to the api-server.

Security teams will be particularly interested in this new feature as it provides a simple primitive to define Cilium Network Policy for pods to allow or disallow reachability to the kube-apiserver. The following policy snippet allows all Cilium Endpoints in the kube-system namespace to reach the kube-apiserver, while all Cilium Endpoints outside that namespace will be denied access if they are in default-deny.
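As a minimal sketch of what a policy with this effect can look like, the snippet below builds a CiliumNetworkPolicy as a Python dictionary and prints it as JSON. The policy name `allow-to-apiserver` and the helper `apiserver_egress_policy` are hypothetical; the `toEntities` selector with the `kube-apiserver` entity is the primitive described in this section.

```python
import json


def apiserver_egress_policy(name, namespace):
    """Build a policy allowing all endpoints in a namespace to reach the API server."""
    return {
        "apiVersion": "cilium.io/v2",
        "kind": "CiliumNetworkPolicy",
        # The policy name is a placeholder chosen for this example.
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            # An empty selector matches every endpoint in the namespace.
            "endpointSelector": {},
            "egress": [{"toEntities": ["kube-apiserver"]}],
        },
    }


policy = apiserver_egress_policy("allow-to-apiserver", "kube-system")
print(json.dumps(policy, indent=2))
```

Since kubectl accepts JSON manifests, the printed document could be piped into `kubectl apply -f -` on a test cluster.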

BGP Pod CIDR Announcements

With an increased focus on on-prem Kubernetes environments, there is also a desire to better integrate with the existing data center network infrastructure, which typically distributes routes through BGP. We started the BGP integration for the Cilium agent in the last release by supporting the announcement of LoadBalancer service VIPs via BGP to BGP routers.

The Cilium 1.11 release additionally introduces the ability to announce Kubernetes Pod subnets via BGP. Cilium establishes a BGP peering with any downstream BGP-speaking infrastructure and advertises the subnet from which Pod IP addresses are allocated. From there, the downstream infrastructure can distribute those routes as it sees fit, enabling the data center to route directly to Pods over various private/public hops.

To begin using this feature a BGP configuration map must be pushed to the Kubernetes nodes hosting Cilium:

Cilium should then be installed with the following flags:

Once Cilium is installed it will announce the Pod CIDR range to the BGP router at 192.168.1.11.

A full demonstration can be viewed below which was shown in our recent Cilium eCHO episode:

Learn more about how to configure both the announcement of LoadBalancer IPs for Kubernetes services and a node’s Pod CIDR range via BGP at docs.cilium.io.

Managed IPv4/IPv6 Neighbor Discovery

When Cilium’s eBPF-based kube-proxy replacement is enabled, Cilium performs neighbor discovery of nodes in the cluster in order to gather L2 addresses of direct neighbors or next hops in the network. This is required for the service load-balancing to ensure reliable high rates of traffic up to several millions of packets per second which is supported by the eXpress Data Path (XDP) fast path. In this mode, dynamic on-demand resolution is technically not possible as it would require waiting for e.g. neighboring backends to get resolved.

In Cilium 1.10 and earlier, the agent itself contained an ARP resolution library where a controller triggered discovery and periodic refresh of new nodes joining the cluster. The manually resolved neighbor entries were pushed into the kernel and refreshed as PERMANENT entries where the eBPF load-balancer retrieves their addresses for directing traffic to backends. Aside from the lack of IPv6 neighbor resolution support in the agent’s ARP resolution library, PERMANENT neighbor entries have a number of issues: to name one, entries can become stale and the kernel refuses to learn address updates since they are static by nature, causing packets between nodes to be dropped in some circumstances. Moreover, tightly coupling the neighbor resolution into the agent also has the disadvantage that upon agent down/up cycles no learning of address updates can take place.

For Cilium 1.11, neighbor discovery has been fully reworked and the Cilium internal ARP resolution library has been removed entirely from the agent. The agent now relies on the Linux kernel to discover next hops or hosts in the same L2 domain. Both IPv4 and IPv6 neighbor discovery are now supported in Cilium. For v5.16 or newer kernels, we have upstreamed (part1, part2, part3) our “managed” neighbor entry work presented earlier at the BPF & Networking Summit we co-organized during the Linux Plumbers conference this year. In this case, the agent pushes down L3 addresses of new nodes joining the cluster and triggers the kernel to periodically auto-resolve their corresponding L2 addresses.

Such neighbor entries are pushed with “externally learned” and “managed” neighbor attributes into the kernel via netlink. While the former attribute ensures that those neighbor entries are not subject to the kernel’s garbage collector when under pressure, the “managed” attribute tells the kernel to automatically keep such neighbors in REACHABLE state if feasible. That is, if the node’s upper stack is not actively sending traffic to or receiving traffic from backend nodes, so that the kernel cannot re-learn the address and keep the neighbor in REACHABLE state, an explicit neighbor resolution is periodically triggered through an internal kernel workqueue. For older kernels which do not have the “managed” neighbor feature, an agent’s controller will periodically kick the kernel to trigger a new resolution if needed. Thus, there are no PERMANENT neighbor entries anymore on the Cilium side, and upon upgrade the agent will atomically migrate the former into dynamic neighbor entries where the kernel is able to learn address updates.

Additionally, in case of multipath routes to backends, the agent’s load-balancing now also takes failed next hops into consideration for its route lookup. Instead of alternating between all routes, failed paths are avoided by taking the neighboring subsystem’s information into account. Overall, for the Cilium agent, this rework significantly simplifies neighbor management and makes the datapath more resilient with regard to neighbor address changes of nodes or next hops in the network.
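The idea of skipping failed next hops during a multipath lookup can be illustrated with a small model. This is only a sketch under assumptions: the function `pick_next_hop`, the hash-modulo selection, and the fallback when every hop has failed are invented for illustration and do not describe the actual datapath code. REACHABLE, STALE and FAILED are kernel neighbor states.

```python
FAILED = "FAILED"


def pick_next_hop(next_hops, neigh_state, flow_hash):
    """Pick a next hop for a flow, skipping hops whose neighbor entry is FAILED."""
    usable = [hop for hop in next_hops if neigh_state.get(hop) != FAILED]
    # Assumption: if every hop has failed, fall back to the full set.
    candidates = usable or next_hops
    return candidates[flow_hash % len(candidates)]


next_hops = ["192.168.0.1", "192.168.0.2", "192.168.0.3"]
neigh_state = {
    "192.168.0.1": "REACHABLE",
    "192.168.0.2": "FAILED",
    "192.168.0.3": "STALE",
}
for flow_hash in range(4):
    print(flow_hash, pick_next_hop(next_hops, neigh_state, flow_hash))
```

In this model no flow is ever steered to `192.168.0.2` while its neighbor entry is marked as failed.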

XDP Multi-Device Load-Balancer Support

Up until this release, the XDP-based load-balancer acceleration could only be enabled on a single networking device in order to operate as a hair-pinning load-balancer (where forwarded packets leave through the same device they arrived on). This initial constraint was added with the XDP-based kube-proxy replacement acceleration because driver support for multi-device forwarding under XDP (XDP_REDIRECT) was limited, whereas same-device forwarding (XDP_TX) is part of every driver’s initial XDP support in the Linux kernel.

This meant that environments with multiple networking devices had to use Cilium’s regular kube-proxy replacement for the tc eBPF layer. One typical example of such an environment is a host with two network devices: One of which faces a public network (e.g., accepting requests to a Kubernetes service from the outside), while the other one faces a private network (e.g., used for in-cluster communication among Kubernetes nodes).

On modern LTS Linux kernels, the vast majority of 40G and 100G+ upstream NIC drivers now support XDP_REDIRECT out of the box, so this constraint can finally be lifted. This release implements load-balancing support among multiple network devices in the XDP layer for Cilium’s kube-proxy replacement as well as Cilium’s standalone load balancer, which keeps packet processing performance high in more complex environments too.
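The difference between the two forwarding modes comes down to which XDP verdict the program returns. The following sketch models that decision in Python; the `forward_action` helper and the interface indexes are hypothetical, while the enum values mirror the kernel’s `enum xdp_action`.

```python
from enum import IntEnum


class XdpAction(IntEnum):
    # Values as defined by the kernel's enum xdp_action.
    XDP_ABORTED = 0
    XDP_DROP = 1
    XDP_PASS = 2
    XDP_TX = 3
    XDP_REDIRECT = 4


def forward_action(ingress_ifindex, egress_ifindex):
    """Choose the verdict for a packet that must be forwarded to a backend."""
    if egress_ifindex == ingress_ifindex:
        # Hair-pinning: send the packet back out of the device it arrived on.
        return XdpAction.XDP_TX
    # Multi-device: hand the packet to a different device.
    return XdpAction.XDP_REDIRECT


print(forward_action(2, 2).name)
print(forward_action(2, 3).name)
```

With a public-facing device at index 2 and a private one at index 3, traffic towards in-cluster backends takes the `XDP_REDIRECT` path in this model.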

Transparent XDP Bonding Support

In many on-prem as well as cloud environments, nodes typically use a bonded device setup for dual-port NICs facing external traffic. With the recent advancements in previous Cilium releases such as the ability to run the kube-proxy replacement or the standalone load balancer at the XDP layer, one frequent question from our users was whether the XDP acceleration can be used in combination with bonded networking devices. While the Linux kernel supports XDP for the vast majority of 10/40/100 Gbit/s network drivers, it was lacking the ability to transparently operate XDP in bonded (and 802.3ad) modes.

One option would be to implement 802.3ad in user-space and implement the bonding load-balancing in the XDP program itself, but this turns out to be a rather cumbersome and racy endeavor in terms of bond device management (for example, when watching for netlink link events), and it would additionally require the orchestrator to maintain separate programs for native versus bond cases. Instead, a native in-kernel implementation overcomes these issues, provides more flexibility, and is able to handle eBPF programs without needing to change or recompile them. Given the kernel is in charge of the device group of the bond, it can automatically propagate the eBPF programs. For v5.15 or newer kernels, we have upstreamed (part1, part2) our implementation for XDP bond support.

Semantics of XDP_TX when an XDP program is attached to a bond device are equivalent to the case when a tc eBPF program would be attached to the bond, meaning transmitting the packet out of the bond itself is done by using one of the bond’s configured transmit methods to select a subordinate device. Both fail-over and link aggregation modes can be used under XDP operation. We implemented support for round-robin, active backup, 802.3ad as well as a hash-based device selection for transmitting the packets back out of the bond via XDP_TX. This case is in particular of interest in hair-pinning load-balancers like in the case of Cilium.

Route-based Device Detection

This release significantly improves the automatic detection of devices used by the eBPF-based kube-proxy replacement, bandwidth manager and the host firewall features.

In earlier releases the devices automatically picked by Cilium were the device with the default route and the device with the Kubernetes NodeIP. Going forward, the devices are now picked based on the entries in all routing tables of the host namespace. That is, all non-bridged, non-bonded and non-virtual devices that have global unicast routes on them are now detected.

With this improvement Cilium should now be able to automatically detect the correct devices in more complicated networking setups without the need to specify devices manually with the devices option. This implicitly also removes the need for a consistent device naming convention (e.g. regular expression with a common prefix) when the latter option was used.
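The selection rule can be modelled in a few lines. This is a sketch rather than the agent’s code: the `Device` and `Route` classes, their fields, and the sample interface names are assumptions made for illustration, with `master` standing in for membership in a bridge or bond.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Device:
    name: str
    master: Optional[str] = None  # set when enslaved to a bridge or bond
    virtual: bool = False         # e.g. veth devices


@dataclass(frozen=True)
class Route:
    dev: str
    dst: str
    scope: str  # "global", "link" or "host"


def detect_devices(devices, routes):
    """Return names of devices that carry at least one global-scope route."""
    with_global_route = {r.dev for r in routes if r.scope == "global"}
    return sorted(
        d.name
        for d in devices
        if d.name in with_global_route and d.master is None and not d.virtual
    )


devices = [
    Device("eth0"),
    Device("eth1"),
    Device("eth2", master="br0"),
    Device("lxc_health", virtual=True),
]
routes = [
    Route("eth0", "default", "global"),
    Route("eth1", "10.0.0.0/8", "global"),
    Route("eth2", "172.16.0.0/16", "global"),
    Route("lxc_health", "10.1.0.5/32", "link"),
]
print(detect_devices(devices, routes))
```

Here `eth1` is picked up even though it holds neither the default route nor the NodeIP, which is the case the earlier heuristic missed.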

Graceful Service Backend Termination

Kubernetes can terminate a service endpoint Pod for a number of reasons like rolling update, scale down, or user initiated deletion. In such cases, it is important that the active service connections to the Pod are gracefully terminated, giving the application time to finish the request in order to minimize disruption. Abrupt connection terminations can lead to data loss, or can delay application recovery.

The Cilium agent listens for service endpoint updates via the EndpointSlice API. When a service endpoint is terminated, Kubernetes sets the terminating state for the endpoint. The Cilium agent then removes the datapath state for the endpoint such that the endpoint is not selected for new requests, but existing service connections that this endpoint is servicing can be terminated within a user defined grace period.

In parallel, Kubernetes instructs container runtimes to send the SIGTERM signal to the service Pod containers, and waits for the termination grace period. The container applications can then initiate graceful termination for active connections (e.g., close TCP sockets). Kubernetes finally triggers forceful shutdown via the SIGKILL signal to processes that are still running in the Pod containers once the grace period ends. At this time, the agent also receives a delete event for the endpoint, and it then completely removes the endpoint’s datapath state. However, if the application Pod exits before the graceful termination period ends, Kubernetes will send the delete event immediately, regardless of the grace period.
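The lifecycle described above can be summarized with a toy state table. This is a minimal sketch, assuming a hypothetical `BackendTable` class; it models only the visible behavior (terminating backends receive no new connections but keep serving existing ones until deleted), not the eBPF maps involved.

```python
class BackendTable:
    """Toy model of service backend state during graceful termination."""

    def __init__(self):
        self.state = {}  # address -> "active" or "terminating"

    def upsert(self, address, terminating=False):
        # Corresponds to an endpoint add or update event.
        self.state[address] = "terminating" if terminating else "active"

    def delete(self, address):
        # Corresponds to the final delete event for the endpoint.
        self.state.pop(address, None)

    def select_for_new_connection(self):
        # Terminating backends are never offered to new connections.
        return sorted(a for a, s in self.state.items() if s == "active")

    def can_serve_existing(self, address):
        # Existing connections keep working until the backend is deleted.
        return address in self.state


table = BackendTable()
table.upsert("10.0.0.1")
table.upsert("10.0.0.2")
table.upsert("10.0.0.1", terminating=True)
print(table.select_for_new_connection(), table.can_serve_existing("10.0.0.1"))
table.delete("10.0.0.1")
print(table.can_serve_existing("10.0.0.1"))
```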

Follow the guide for more details in docs.cilium.io.

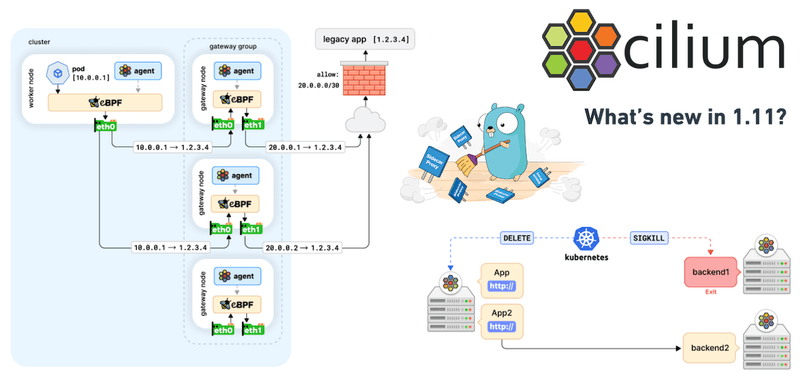

Egress Gateway Improvements

In a simpler world, Kubernetes applications would only communicate with other Kubernetes applications, and their traffic would be controlled via mechanisms such as network policies. In real world setups (e.g., on-prem deployments where a number of applications have not been containerized), this is not always the case, and Kubernetes applications might need to contact services outside the cluster. These legacy services will typically have static IPs and will be protected by static firewall rules. How can traffic be controlled and audited in such a scenario?

The Egress IP Gateway feature, introduced in Cilium 1.10, aims to address the above question by allowing Kubernetes nodes to act as gateways for cluster-egress traffic. Users use policies to prescribe what traffic should be forwarded to the gateway nodes and how it should exit the Kubernetes cluster from there. Using this mechanism, gateway nodes will masquerade traffic using static egress IPs that can be used to build rules for legacy firewalls.

With a sample policy that selects source Pods labelled app: test-app and the destination CIDR 1.2.3.0/24, traffic matching both does not egress directly from the node where the client Pod is running. Instead, it leaves the cluster via the gateway node with the 20.0.0.1 egress IP (SNAT).
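The matching rule for such a policy (source Pod labels and destination CIDR must both match) can be sketched as below. The `EgressPolicy` class and `egress_ip_for` function are hypothetical and model only the decision, not the policy resource or the datapath; the label, CIDR and egress IP are the values from the example.

```python
import ipaddress
from dataclasses import dataclass


@dataclass
class EgressPolicy:
    pod_labels: dict
    destination_cidrs: list
    egress_ip: str


def egress_ip_for(policy, pod_labels, dst_ip):
    """Return the SNAT egress IP if the policy applies to this flow, else None."""
    labels_match = all(pod_labels.get(k) == v for k, v in policy.pod_labels.items())
    dst = ipaddress.ip_address(dst_ip)
    cidr_match = any(dst in ipaddress.ip_network(c) for c in policy.destination_cidrs)
    return policy.egress_ip if labels_match and cidr_match else None


policy = EgressPolicy({"app": "test-app"}, ["1.2.3.0/24"], "20.0.0.1")
print(egress_ip_for(policy, {"app": "test-app"}, "1.2.3.4"))
print(egress_ip_for(policy, {"app": "other"}, "1.2.3.4"))
print(egress_ip_for(policy, {"app": "test-app"}, "8.8.8.8"))
```

Only the first flow is sent through the gateway and masqueraded to 20.0.0.1; the other two leave from the local node as usual.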

In the 1.11 development cycle, a lot of effort was put into stabilizing the egress gateway feature towards making it production-ready. Egress gateway now works when using direct routing, distinguishes internal traffic which enables egress policies using CIDRs that overlap with Kubernetes addresses, and allows using the same egress IP in different policies. Issues such as reply traffic being mischaracterized as egress traffic have been fixed, and testing was improved so that potential regressions are caught early.

Kubernetes Cgroup Enhancements

A critical role in Cilium’s eBPF-based kube-proxy replacement as well as standalone load balancer is the ability to attach eBPF programs to socket hooks such as connect(2), bind(2), sendmsg(2) and various other related system calls in order to transparently wire node-local applications to service backends. These programs can only be attached to cgroup v2, and while efforts are well underway to migrate Kubernetes to cgroup v2-only, the vast majority of users are running a mixed mode environment where cgroup v1 and v2 are operated in parallel.

The Linux kernel tags the socket-to-cgroup relationship inside the kernel’s socket object, and due to assumptions that were made around 6 years ago, the socket tag for cgroup v1 versus v2 membership is mutually exclusive. That is, if a socket was created with a cgroup v2 membership but at a later point in time got tagged through net_prio or net_cls controllers with a cgroup v1 membership, then the cgroup v2 falls back to executing an eBPF program attached to the root of the cgroup v2 hierarchy instead of the one actually attached at the subpath of the Pod. This could lead to bypassing of eBPF programs in the cgroup v2 hierarchy if, for example, no program is attached at the cgroup v2 root.

However, as outlined in our Linux Plumbers conference talk earlier this year, the assumption that both are not operated in parallel no longer holds true in today’s production environments. On a few occasions we have seen third-party agents in the Kubernetes cluster that use cgroup v1 networking controllers bypass eBPF programs from cgroup v2 membership when the latter were attached to a non-root path in the hierarchy. In order to address this early, we recently fixed the Linux kernel (including stable kernels) to safely allow inter-operation between both flavors (part1, part2) in all situations. This makes cgroups operation fully robust and reliable for such corner cases not only in Cilium but also for all other eBPF cgroup users in Kubernetes in general.

Additionally, Kubernetes and container runtimes like Docker recently announced support for cgroup v2. In this mode, Docker switched to private cgroup namespaces by default, whereby every container (including Cilium) runs in its own private cgroup namespace. We made Cilium’s socket-based load balancing functional in such environments by ensuring that the eBPF programs are attached to the socket hooks at the right cgroup hierarchy.

Improved Load Balancer Scalability

Primary external contributor: Weilong Cui (Google)

In large-scale Kubernetes environments running Cilium with more than 64 thousand Kubernetes endpoints, testing of large deployments recently hit a scale limit on the backend side of service load-balancing.

There were two limiting factors:

- Cilium’s eBPF-based kube-proxy replacement’s local backend ID allocator was still limited to a 16-bit ID space.

- Cilium’s eBPF datapath backend maps for both IPv4 and IPv6 were using a key type that was limited to a 16-bit ID space.

In order to be able to scale beyond 64 thousand endpoints, the ID allocator as well as related datapath structures have been converted to use a 32-bit ID space.
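To make the limit concrete, the sketch below checks whether a backend ID fits into an unsigned 16-bit or 32-bit field using Python’s `struct` module. The `fits` helper and the sample ID 70000 are illustrative assumptions; the bounds themselves are plain arithmetic.

```python
import struct

MAX_U16 = 2**16 - 1  # largest ID in a 16-bit space
MAX_U32 = 2**32 - 1  # largest ID in a 32-bit space


def fits(backend_id, fmt):
    """Return True if backend_id can be packed with the given struct format."""
    try:
        struct.pack(fmt, backend_id)
        return True
    except struct.error:
        return False


print(MAX_U16, MAX_U32)
# "<H" is an unsigned 16-bit field, "<I" an unsigned 32-bit field.
print(fits(70_000, "<H"), fits(70_000, "<I"))
```

An ID of 70000 cannot be represented in the 16-bit field but fits comfortably in the 32-bit one, which allows roughly 4.29 billion distinct IDs.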

Cilium Endpoint Slices

Primary external contributors: Weilong Cui (Google) and Gobinath Krishnamoorthy (Google)

With the 1.11 release, we are adding support for a new mode of operation that greatly improves Cilium’s ability to scale through a more efficient way of broadcasting Pod information.

Previously, Cilium broadcast Pods’ IP addresses and security identity information through watches on CiliumEndpoint (CEP) objects. This poses some scalability challenges. The creation/update/deletion of each CEP object would trigger a multicast of watch events that scales linearly with the number of Cilium-agents in the cluster, while each Cilium-agent can trigger such a fan-out action. With N nodes in the cluster, the total watch events and traffic potentially scale quadratically, on the order of N^2.
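The quadratic growth follows from multiplying the two linear factors. As a back-of-the-envelope sketch (the `watch_events` function and the assumption of one CEP update per node are illustrative, not measured values):

```python
def watch_events(nodes, updates_per_node):
    """Total watch events when every CEP update fans out to every agent."""
    total_updates = nodes * updates_per_node  # each node can originate updates
    return total_updates * nodes              # each update is sent to all agents


for n in (10, 100, 1000):
    print(n, watch_events(n, updates_per_node=1))
```

Doubling the number of nodes quadruples the event count in this model, which is why batching endpoint information becomes attractive at large cluster sizes.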

Cilium 1.11 introduces a new CRD, CiliumEndpointSlice (CES), where slim versions of CEPs from the same namespace can be grouped together into CES objects by the operator. Cilium-agents watch CES instead of CEP in this mode. This leads to fewer watch events and less traffic that needs to be broadcast from the kube-apiserver, which greatly reduces kube-apiserver stress and allows Cilium to scale better.

Since the introduction of CEP Cilium has been able to run without a dedicated instance of etcd (KVStore-mode). However, using KVStore was still recommended for clusters with a high Pod churn to offload the amount of processing work done in kube-apiserver into the etcd instance. With the introduction of CES, using a dedicated instance of etcd will not be as critical since CES will also help to reduce the load on the kube-apiserver.

This mode effectively trades off faster endpoint information propagation for better control plane scalability. A potential caveat of running this mode is that, compared to CEP mode, it can incur a higher delay for endpoint information to propagate to remote nodes at large scale and when the Pod churn rate is relatively high.

GKE is an early adopter of this technology. We did some “worst-case” scale testing on GKE and found that Cilium scales much better in the CES mode. In a 1000-node scale load test, enabling CES reduces the peak watch events from 18k/s for CEP to 8k/s for CES, and the peak watch traffic from 36.6 Mbps for CEP to 18.1 Mbps for CES. In terms of controller node resource usage, it reduces the peak CPU usage from 28 cores/s to 10.5 cores/s.
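Expressed as relative reductions, the figures from this load test work out as follows. The `reduction` helper is just a convenience for the arithmetic; the inputs are the numbers quoted above.

```python
def reduction(before, after):
    """Percentage reduction from before to after, rounded to one decimal."""
    return round(100 * (before - after) / before, 1)


print("peak watch events:", reduction(18, 8), "%")
print("peak watch traffic:", reduction(36.6, 18.1), "%")
print("peak CPU usage:", reduction(28, 10.5), "%")
```

In this test, CES roughly halves the peak watch events and traffic and cuts peak CPU usage by more than half.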

Figure 1. CEP watch events and traffic in a 1000-node scale load test on GKE

Figure 2. CEP and CES watch events and traffic in a 1000-node scale load test on GKE

Follow the guide for more details at docs.cilium.io.

Istio Support with Kube-Proxy-Replacement

Many of our users operate Kubernetes without kube-proxy in order to be able to avoid paying the cost of traversing the upper networking stack, and to benefit from a more efficient eBPF-based datapath. Among other advantages, this helps avoid potentially long lists of linearly processed iptables rules installed by kube-proxy and other Kubernetes components.

Handling the Kubernetes service load-balancing in eBPF is architecturally split into two components: Processing of external service traffic coming into the cluster (North/South direction), and processing of internal service traffic coming from within the cluster (East/West direction). With the help of eBPF, the former has been implemented in Cilium to operate on a per-packet basis as close as possible in the driver layer (e.g. via XDP), whereas the latter is operating as close as possible towards the application layer where eBPF directly “wires” requests of the application (e.g. TCP connect(2)) from a service Virtual IP to one of the backend IPs in order to avoid a per-packet NAT translation cost.

This works well for the vast majority of use-cases. However, today’s service mesh solutions such as Istio are still heavily integrated with iptables, installing extra redirection rules in a Pod namespace such that application traffic first reaches the proxy sidecar before it leaves the Pod for the host namespace. Proxies like Envoy then query the Netfilter connection tracker directly via SO_ORIGINAL_DST from their internal sockets to gather the original service destination, for example.

This implies that the service traffic processing for Pods (E/W direction) needs to fall back to an eBPF-based DNAT on a per-packet basis, while applications inside the host namespace can still benefit from Cilium’s socket-based load-balancer which avoids the per-packet translations. This release now supports the latter through a new Cilium agent option which can be enabled in the deployment yaml using the Helm setting bpf-lb-sock-hostns-only: true.

Follow the guide for more details in docs.cilium.io.

Mirantis Kubernetes Engine Integration

We’ve added an integration with Mirantis Kubernetes Engine.

Here at Isovalent we work with customers that deploy Kubernetes in every way possible! With this release we have extended both Cilium and Isovalent Cilium Enterprise to integrate with Mirantis Kubernetes Engine. Mirantis Kubernetes Engine was formerly known as Docker Enterprise/UCP and deploys Kubernetes and all of its components in Docker containers, leveraging Swarm to manage cluster membership. This integration is enabled with a new flag in the Cilium Helm chart.

Feature Promotions and Deprecations

The following features have been promoted:

- Host Firewall moves from beta to stable. The host firewall protects the host network namespace by allowing CiliumClusterwideNetworkPolicies to select cluster nodes. Since the introduction of the host firewall feature, we’ve significantly increased test coverage and fixed several bugs. We also received feedback from several community users and are satisfied this feature is ready for production clusters.
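As a rough illustration of what “selecting cluster nodes” means, the Python sketch below builds a host policy manifest and prints it as JSON (which kubectl also accepts). A host policy uses a nodeSelector where an ordinary policy would use an endpoint selector. The policy name, node label, and port are hypothetical examples, and the host firewall must be enabled in the cluster for such a policy to take effect.

```python
import json


def host_policy(name, node_labels, tcp_ports):
    """Build a CiliumClusterwideNetworkPolicy that applies to nodes.

    Allows all ingress from within the cluster, plus ingress from anywhere
    to the given TCP ports, on nodes matching node_labels.
    """
    return {
        "apiVersion": "cilium.io/v2",
        "kind": "CiliumClusterwideNetworkPolicy",
        "metadata": {"name": name},
        "spec": {
            # nodeSelector (not endpointSelector) makes this a host policy.
            "nodeSelector": {"matchLabels": dict(node_labels)},
            "ingress": [
                {"fromEntities": ["cluster"]},
                {
                    "toPorts": [
                        {
                            "ports": [
                                {"port": str(p), "protocol": "TCP"}
                                for p in tcp_ports
                            ]
                        }
                    ]
                },
            ],
        },
    }


if __name__ == "__main__":
    # Hypothetical example: allow SSH to nodes labeled node-access=ssh.
    policy = host_policy("demo-host-policy", {"node-access": "ssh"}, [22])
    print(json.dumps(policy, indent=2))
```

Running the script prints a manifest that can be reviewed before it is applied; note that once a node is selected by a host policy, ingress not explicitly allowed is dropped, so test on a non-critical node first.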

The following features have been deprecated:

- Consul as a kvstore backend is now deprecated in favor of the better-tested etcd and Kubernetes backends. Consul was previously used primarily by Cilium developers for local end-to-end testing, but during recent development cycles this testing has been performed directly through Kubernetes by using the Kubernetes kvstore backend instead.

- IPVLAN support was previously offered as an alternative to the use of virtual ethernet (veth) device support for providing network connectivity from nodes into the Pods on the node. As described in recent blog posts around the eBPF host routing feature, improvements to the Linux kernel which were driven by the Cilium community have allowed veth support to reach comparable performance to the IPVLAN approach.

- Policy Tracing via the cilium policy trace in-Pod command-line tool was used by Cilium users during early Cilium releases, but over time it has not kept pace with the feature progression of the Cilium policy engine. Recent tools such as the Network Policy Editor and Policy Verdicts support now provide alternative ways to reason about the policy decisions that Cilium makes.

Isovalent Cilium Enterprise 1.11

The features listed below are additions to Isovalent Cilium Enterprise 1.11. All features will also be available in the next version of Isovalent Cilium Enterprise 1.10.x.

Timescape (Enterprise)

Timescape is a new addition to Isovalent Cilium Enterprise that introduces a time-series database using ClickHouse to the Cilium ecosystem. It can be fed by Cilium and Hubble observability to store all observability data persistently. You can then run all of the Hubble tooling (UI, CLI, API) to extract observability data and use it as a time machine for all observability data.

Timescape can store a variety of Cilium & Hubble related observability data:

- Network flow logs at L3-L4 with rich Kubernetes metadata and in-depth network protocol insights

- L7 tracing data for protocols such as HTTP, gRPC, memcached, Kafka, and more

- Runtime behavior of applications (syscalls, kprobes, file opens, privilege escalation, …)

- Security events (NetworkPolicy, Drops, Runtime enforcement violations, …)

- Kubernetes object history & audit events (pod deploys, service deletions, scale up/down, …)

All of this data can be accessed via Hubble UI, the Hubble CLI, or a powerful query API to run analytics workflows. Here is an example of showing runtime visibility data by connecting the user interface of Isovalent Hubble Enterprise to Timescape:

HA for FQDN & Egress IP (Enterprise)

Enterprise environments require additional redundancy and high availability of the Egress Gateway feature, in order for the cluster to tolerate node failures. To this end, the Isovalent Cilium Enterprise 1.11 release and next release of 1.10.x will bring initial support for Egress Gateway High Availability (HA), allowing an egress NAT policy to select a group of gateway nodes instead of a single one.

Multiple nodes acting as egress gateways will make it possible to both distribute traffic across different gateways and to have additional gateways in a hot standby setup, ready to take over in case an active gateway fails.

Here’s an example of an HA egress NAT policy:

In this example, the policy matches all traffic originating from Pods labeled with app: test-app and destined to the 1.2.3.0/24 CIDR, forwarding that traffic to a healthy gateway node labeled with egress-group: egress-group-1. The gateway then SNATs that traffic with the IP assigned to its eth1 interface and forwards it to the destination. Lastly, the maxGatewayNodes: 2 property ensures that at most 2 gateway nodes are active at the same time, while any remaining ones stand ready to take over in case one of the active gateways fails.
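The active/standby behavior described above can be modeled in a few lines of Python. This is only a sketch of the idea under our own assumptions: the Node type, the function name, and the rule of picking active gateways in name order are hypothetical, and the selection logic actually used by Isovalent Cilium Enterprise may differ.

```python
from dataclasses import dataclass


@dataclass
class Node:
    name: str
    labels: dict
    healthy: bool = True


def split_gateways(nodes, selector, max_gateway_nodes):
    """Return (active, standby) lists of gateway node names.

    Only healthy nodes whose labels include every selector label qualify.
    At most max_gateway_nodes of them are active; the rest are standbys.
    Ordering by name is an assumption made here for determinism.
    """
    matching = sorted(
        (n for n in nodes
         if n.healthy and selector.items() <= n.labels.items()),
        key=lambda n: n.name,
    )
    names = [n.name for n in matching]
    return names[:max_gateway_nodes], names[max_gateway_nodes:]


if __name__ == "__main__":
    group = {"egress-group": "egress-group-1"}
    nodes = [
        Node("gw-a", dict(group)),
        Node("gw-b", dict(group)),
        Node("gw-c", dict(group)),
        Node("worker-1", {}),
    ]
    print(split_gateways(nodes, group, 2))  # two active, one standby
    nodes[0].healthy = False                # an active gateway fails
    print(split_gateways(nodes, group, 2))  # the standby is promoted
```

With three labeled nodes and a limit of two, one node remains on standby; when an active gateway becomes unhealthy, recomputing the split promotes the standby so that two gateways stay active.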

eBPF-based L7 Tracing & Metrics without Sidecars (Enterprise)

Native eBPF-based HTTP, DNS, & TLS visibility is becoming available in Isovalent Cilium Enterprise 1.11 and the next 1.10.x releases. The feature provides a high-performance alternative to the existing L7 visibility and runs entirely in-kernel, using eBPF protocol parsers that no longer need to terminate connections or inject proxies. These accelerated protocol parsers are ideal for enterprise environments that cannot tolerate the additional latency introduced by proxy-based architectures.

In the benchmark below, we see initial measurements of how implementing HTTP visibility with eBPF or a sidecar approach affects latency. The setup is running a steady 10K HTTP requests per second over a fixed number of connections between two pods running on two different nodes and measures the mean latency for the requests.

The visibility data is exported in the familiar Hubble and OpenTelemetry formats and can also be exported into any SIEM via the existing integrations. For more information on this topic, see How eBPF will solve Service Mesh – Goodbye Sidecars.

Getting Started

If you are interested in getting started with Cilium, use one of the resources below:

- Learn more about Cilium (Introduction, tutorials, AMAs, installfests, …)

- Getting Started Guides

- Upgrade guide

- Join Cilium Slack to interact with the Cilium community

Further Reading

- 1.11 Release Notes

- How eBPF will solve Service Mesh – Goodbye Sidecars

- Cilium 1.10: WireGuard, BGP Support, Egress IP Gateway, New Cilium CLI, XDP Load Balancer, Alibaba Cloud Integration and more

- Cilium 1.9: Maglev, Deny Policies, VM Support, OpenShift, Hubble mTLS, Bandwidth Manager, eBPF Node-Local Redirect, Datapath Optimizations, and more